Import a large MySQL database with BigDump

BigDump is a small tool that helps you import large MySQL databases. Generally speaking, there are two simpler ways to import a MySQL database. The first is to import directly from the command line, which is the fastest and most convenient way and is standard practice. The other common way is phpMyAdmin, which suits you if you are unfamiliar with the command line. When the database is not very large, phpMyAdmin is sufficient, but when it is very large, phpMyAdmin runs into the limits of its original design, and you may be unable to modify php.ini to work around them. At that point, BigDump can assist with the import. This article introduces the basic use of BigDump, with which you can import MySQL dumps of more than 100 MB through the web. [Note 1]

BigDump at a glance

Current version: BigDump ver. 0.35b (beta) (2013/5/23)

Requirements: PHP, MySQL

Official website: http://www.ozerov.de/bigdump/

Download: http://www.ozerov.de/bigdump.zip

BigDump usage, step one: download and edit the settings

First, decompress the downloaded bigdump.zip file. If your computer has no decompression software, the free 7-Zip archiver will do. After decompression you will see bigdump.php, a small PHP script with an AJAX interface. Only a few settings in it need to be changed before use. Open bigdump.php and find the database settings.

$db_server is the location of the MySQL server, usually localhost; if your database is on another host, change it accordingly. $db_name is the database name, and $db_username and $db_password are the database administrator's account and password. Once these are set, find the import settings.
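As a rough illustration, the database settings might look like the sketch below once edited. Only the four variable names come from the description above; the values are placeholders assumed for the example and must be replaced with your own.

```php
<?php
// Database settings as they might look in bigdump.php after editing.
// All values are placeholders, not real credentials.

$db_server   = 'localhost';    // MySQL host; change it if the database runs on another machine
$db_name     = 'my_database';  // hypothetical name of the target database
$db_username = 'db_user';      // hypothetical database account
$db_password = 'db_password';  // hypothetical password for that account
```

Because the password is stored in plain text inside the script, it is sensible to delete bigdump.php from the server once the import has finished.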

$filename is the name of the file to be imported, usually an SQL file such as "xxx.sql". $ajax can be left enabled so that the AJAX interface is used during the import. $linespersession sets how many lines of the dump are processed in each session; the example processes 3000 at a time. $delaypersession is the pause between sessions in milliseconds, so 6000 means a 6-second pause. Why pause at all? Restoring or importing MySQL data uses a lot of CPU, and without a break the CPU stays under constant high load. Even with the pause, however, the CPU load is hard to bring down during a large import, so adjust these values to suit your own server.
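The import settings described above could be filled in as in this sketch. The variable names and the values 3000 and 6000 are taken from the text; the file name is the article's own placeholder, and the right numbers for a given server are an assumption you will have to test.

```php
<?php
// Import settings as they might look in bigdump.php after editing.

$filename        = 'xxx.sql';  // dump file to import, uploaded next to bigdump.php
$ajax            = true;       // keep the AJAX interface enabled during the import
$linespersession = 3000;       // lines of the dump processed in each session
$delaypersession = 6000;       // pause between sessions in milliseconds (6000 = 6 seconds)
```

To get a feel for the trade-off: with these values a hypothetical dump of 300,000 lines would need about 100 sessions, so the pauses alone would add roughly 10 minutes to the import. A shorter pause finishes sooner but gives the CPU less time to recover.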

BigDump usage, step two: upload and start the MySQL import

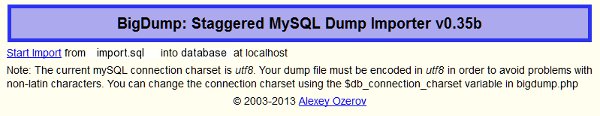

Put the bigdump.php file modified in step one and the SQL file to be imported in the root directory of the website, then open bigdump.php in a browser to see the initial screen. Opening it is simple: on the local machine the address is http://localhost/bigdump.php; replace localhost with your own host name as appropriate.

At this point, confirm the dump file to be imported and the database it will go into. If everything is correct, click the Start Import link.

Seeing this screen means the data has been imported successfully. In this run, 321,220 records were imported, totaling 132.71 MB.

Notes on BigDump

- According to the editor's tests, BigDump can import a database of more than 100 MB, but the CPU stays under high load throughout. If you run your own server and it can handle the load, there is no problem. If you use shared hosting, a VPS, a cloud host, or anything else rented from a hosting company, ask the company before using BigDump whether it is allowed and how large an import file is acceptable, to avoid consuming excessive system resources without the company's consent.
